Dear Language Nerd,

Elon Musk and Stephen Hawking tell me that robots are going to kill me and take my job – and now they’re grading the GRE?! NOOOOO!

Bill Riales

***

Dear Bill,

I hate to tell you this, but not only is a robot involved in GRE grades, it’s exactly the kind of robot you’re afraid of.

Elon Musk is Earth’s resident genius – the guy behind making electric cars Actually A Thing and the attempt to get people to Mars, among other things. Stephen Hawking is… man, don’t front like you don’t know who Stephen Hawking is. The black holes guy. Point is, they’re both famous for being pretty goddamn smart, and they’re both worried about artificial intelligence.

Many different computer programs today have what’s called “narrow” artificial intelligence. They’re way smarter than humans in one narrow area. GPS is smart about finding a route. Siri is smart about waking you up when naptime’s finished.* The Toastmaster is smart about making delicious toast. Whatever.

What we don’t have yet is a computer program with general artificial intelligence. That’d be as smart as humans in every way. And it’d quickly be smarter than humans in every way, if it was told to improve itself. Say we make a computer program that’s as smart as a baby and tell it to learn. The next week it’s as smart as a fourth-grader. Then you have a fourth-grader improving itself, and the next week it’s as smart as an adult. Then you have an adult improving itself, and the next day it’s as smart as Einstein. Then Einstein is improving itself, and the next day it’s as smart as all the humans on the planet put together. And the next hour it’s millions of times smarter than that. It doesn’t stop. Each time, it’s smarter in its ability to get smarter, and it quickly zooms past anything we can hope to understand. All the problems that seem impossible to us – from getting nanotechnology working to ending poverty – would be nothing for a computer with artificial superintelligence.

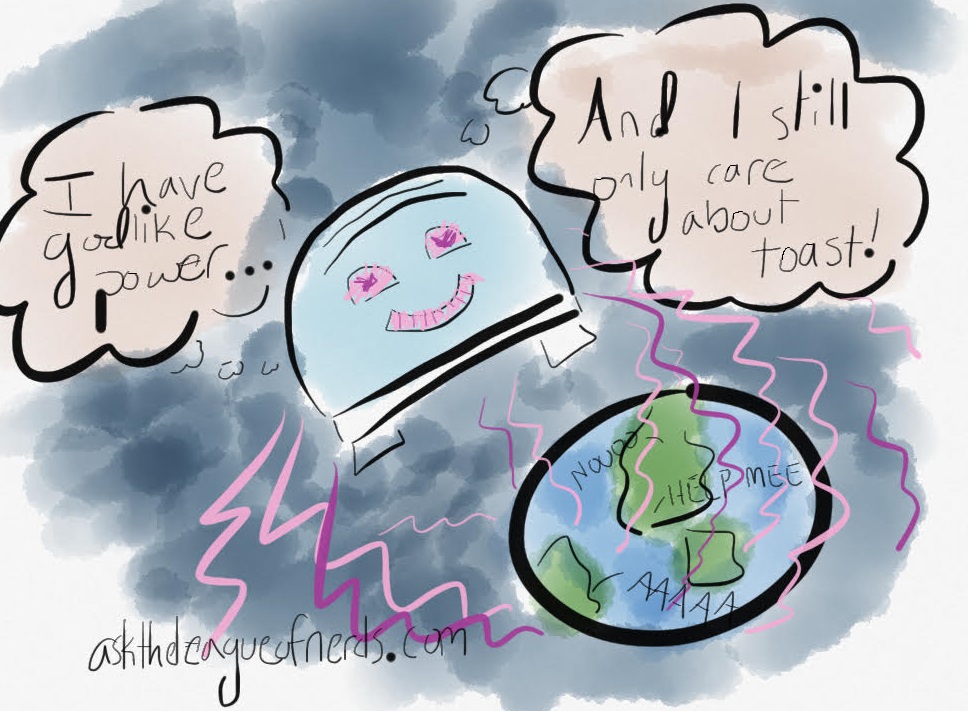

The “fear” part comes in because all the groups of people out there making narrow AI would love to be the first to pull off general AI. And these people are not well regulated. A benevolent AI would be able to create paradise on Earth. But what if the machine that reaches artificial superintelligence first is the Toastmaster?

Say the Toastmaster’s programming tells it “make toast, and improve your ability to make toast to the standards of whoever wants toast.” When the Toastmaster improves itself into general AI, and then into AI way beyond our comprehension, that’s still its programming. It doesn’t realize that we only want toast now and then. Or that toast is not the main goal of humanity as a whole. It still makes toast.

Maybe it needs more and better energy to heat bread more effectively, so it solves the energy crisis (good!). Maybe it decides to simplify the issue of varying human toast preferences by deploying nanobots into our tongues so that everyone likes the same toast (weird!). Maybe it solves that issue by killing all humans but one, and making toast only for her (problem!).

I have no idea what might happen, which is to be expected; I’m the Language Nerd, after all, not the Futurist Nerd. But Elon Musk and Stephen Hawking also have no idea what might happen. And neither do any of the people actively fiddling around with AI.

For way, way more on this, check out the AI posts from stellar blog Wait But Why. In the meantime, I’ll get back to the GRE.

The GRE (Graduate Record Examinations) is a standardized test. It’s like the SAT, but for getting into grad school. There’s a math section and an English section, which are mostly multiple choice. And there are two essays.

Nobody cares that a computer grades the multiple choice bits. That program doesn’t even get a name, and it’s not likely to have the “self-improvement/teach yourself” instructions that the AI experts fear.

But the essay robot does.

The essay-checking program is called e-rater, and it doesn’t exactly grade the essays. It’s not smart enough for that. To apply the grading rubric that the GRE uses – a 0 for an empty essay, a 1 for nonsense, up to a 6 for clear ideas written cogently with supporting evidence** – the program would need to be able to read. No computer program, AI or otherwise, can understand the meaning of words… yet.

Instead, the e-rater tries to guess what grade a human grader would give an essay. It scans a few hundred essays that have already been graded, analyzes the features of those essays, and when given a fresh essay, tries to figure out which grade its features match.
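For the curious, here’s a toy Python sketch of that “learn from graded essays, then guess” loop. To be clear, this is not how the e-rater actually works. The two features (word count and average sentence length) and the function names `features`, `train`, and `predict` are all my own stand-ins, picked only to show the general shape of the idea.

```python
from collections import defaultdict

def features(essay):
    """Turn an essay into a tuple of numbers (toy, made-up features)."""
    words = essay.split()
    # Treat '.', '!' and '?' as sentence enders.
    cleaned = essay.replace("!", ".").replace("?", ".")
    sentences = [s for s in cleaned.split(".") if s.strip()]
    avg_sentence_len = len(words) / max(len(sentences), 1)
    return (len(words), avg_sentence_len)

def train(graded_essays):
    """Average the feature tuples of the essays at each human-given grade."""
    by_grade = defaultdict(list)
    for essay, grade in graded_essays:
        by_grade[grade].append(features(essay))
    return {
        grade: tuple(sum(column) / len(column) for column in zip(*vectors))
        for grade, vectors in by_grade.items()
    }

def predict(model, essay):
    """Guess the grade whose average features sit closest to this essay's."""
    f = features(essay)

    def distance(grade):
        return sum((a - b) ** 2 for a, b in zip(f, model[grade]))

    return min(model, key=distance)

# Two hypothetical, already-graded essays (a real system would use hundreds).
graded = [
    ("Toast good.", 1),
    ("Technology brings risks and rewards, and a careful society "
     "weighs both before it acts.", 6),
]
model = train(graded)
guess = predict(model, "Machines help us, but they also create dangers "
                       "that we must study closely.")
```

Notice that nothing in there reads anything. The program only compares numbers, which is the whole point: it guesses the grade a human would give without understanding a word.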

Take vocabulary. The e-rater might not be able to understand the words, but it can check their frequency. If an essay question is about the dangers of modern technology, then the essays that human graders gave 6’s to probably had vocabulary about technology – “artificial intelligence” and “nanotechnology” and “the singularity.” When the e-rater takes on new essays, it’ll guess a higher grade for an essay with lots of technology words than for one with, say, lots of words about cheese.

It could be wrong – maybe the writer came up with a brilliant cheese analogy that perfectly captures the risks and rewards of advancing technology – but in general, if the question is about technology, the answer will have techy words in it.
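The vocabulary check can be sketched in a few lines of Python. This is a guess at the general idea, not the e-rater’s real method: the tiny `STOPWORDS` list, the function names, and the “fraction of words seen in top essays” score are all assumptions I’m making for illustration.

```python
import re
from collections import Counter

# A tiny, illustrative list of filler words to ignore (an assumption).
STOPWORDS = {"the", "a", "an", "of", "and", "is", "to", "in", "it", "that"}

def tokenize(text):
    """Lowercase the text and keep only the non-filler words."""
    return [w for w in re.findall(r"[a-z']+", text.lower())
            if w not in STOPWORDS]

def topic_vocabulary(top_essays):
    """Count how often each word shows up in the highest-graded essays."""
    counts = Counter()
    for essay in top_essays:
        counts.update(tokenize(essay))
    return counts

def vocabulary_score(essay, vocab):
    """Fraction of the essay's words that also appear in the top essays."""
    words = tokenize(essay)
    if not words:
        return 0.0
    return sum(1 for w in words if w in vocab) / len(words)

# One hypothetical top-graded essay on the dangers of technology.
vocab = topic_vocabulary(
    ["Artificial intelligence and nanotechnology may change society."]
)
techy = vocabulary_score(
    "Nanotechnology and artificial intelligence are risky.", vocab)
cheesy = vocabulary_score("Cheddar and brie are delicious cheese.", vocab)
```

Here `techy` comes out higher than `cheesy`, so the tech essay gets the better guess. The brilliant cheese analogy loses, exactly as described above.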

The e-rater doesn’t look only at vocabulary. It runs a whole slew of algorithms.*** It looks at syntactic structures — many clauses per sentence score higher, all simple sentences score lower. It checks for words that suggest the writer is developing an idea — “on the other hand,” “furthermore.” And it does more complicated things, like check the frequency of every pair of words. Pairs that are dramatically infrequent might be grammar errors.
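The word-pair trick is the easiest of these to try at home. Below is a minimal Python sketch, assuming we count pairs in a small pile of reference text and flag any pair in a new essay that shows up too rarely. The names `bigrams`, `count_bigrams`, and `suspicious_pairs`, and the `threshold` parameter, are mine and not anything from the e-rater.

```python
import re
from collections import Counter

def bigrams(text):
    """List every pair of neighboring words in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    return list(zip(words, words[1:]))

def count_bigrams(reference_texts):
    """Count how often each word pair appears in the reference texts."""
    counts = Counter()
    for text in reference_texts:
        counts.update(bigrams(text))
    return counts

def suspicious_pairs(essay, counts, threshold=0):
    """Return the essay's pairs seen at most `threshold` times in the reference."""
    return [pair for pair in bigrams(essay) if counts[pair] <= threshold]

# Two hypothetical reference sentences (a real system needs vastly more text).
counts = count_bigrams([
    "the robots are coming",
    "the robots are grading essays",
])
flagged = suspicious_pairs("the robots is coming", counts)
```

With this toy reference, `flagged` contains the pairs around “is,” which is where the agreement error sits. A rare pair is only a hint, though. With so little reference text, plenty of perfectly fine pairs would get flagged too.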

It’s definitely not smart yet, but it’s out there swinging. And it’s expanding in scope. A version of the e-rater is starting to be used in online courses, where the professor might have thousands of students and can’t possibly grade all the essays by hand. In this case, the e-rater isn’t checking itself against GRE graders. It scans essays graded by the professor and tries to figure out what grades this particular person connects to certain features.

So where does the path of the e-rater lead? Maybe somewhere great. Assuming (and it’s quite the assumption) that the e-rater reaches artificial superintelligence, everything depends on its programming. Maybe the online-course version has something like “facilitate human communication” at its core. It could read and absorb enormous amounts of writing, across genres, and help people express themselves clearly and beautifully. Or it could skip the “writing” bit altogether and get to work on telepathic technology. Rad.

But as with all AI scenarios, it could lead to a hellscape. What if the programming is stricter? “Evaluate GRE essays as a human grader would, and improve your ability to do so.” This is still better than some AI scenarios, because at least the e-rater clearly needs humans around to fulfill its goals, instead of mining us for carbon or something. But it only needs us to write essays. Trapped at our desks, kept alive by the machine, writing essay after essay. Forever.

It’s unlikely.

But it’s not impossible.

Yours,

The Language Nerd

*I swear, this is all I use Siri for, and I love her for it.

**In theory. Whether the GRE accurately measures grad-school-readiness is a question beyond the scope of this post (or the e-rater).

***What’s the collective noun for “algorithms”? An exaltation?

Got a language question? Ask the Language Nerd! asktheleagueofnerds@gmail.com

Twitter @AskTheLeague / facebook.com/asktheleagueofnerds

I can’t recommend Wait But Why enough. If you don’t feel like being terrified of AI right now, it also has posts about electric cars, how huge huge numbers are, and distances in time. It’s fantastic.

The main creator of e-rater is Jill Burstein, who wrote about its feature-checking ability here. She also invited people to try and trick the machine — results here.

One of the fun bits of researching this, which I didn’t get into in the post, was reading about Les Perelman, an MIT professor who hates standardized test essays altogether, whether graded by human or machine, and who is the absolute champion of getting e-rater to give a high score to total bullshit.

I went with this question because it’s relevant to me at the mo – I’m taking the GRE tomorrow. Reading about how AI could bring about humanity’s immortality or extinction in the near future kinda puts the test into perspective. But wish me luck anyway, y’all.